As tools based on machine learning and AI have appeared, most recently Adobe’s Generative Fill feature in Photoshop, photographers seem to bounce between embracing the technology as a new creative tool and rejecting the intrusion of “AI” into a pursuit that values image authenticity and real-world experience.

But while generative AI has stolen all the attention lately, machine learning has long maintained a foothold in the photography field. Here are five areas where you’re probably already benefiting from machine learning, even if you’re not aware of it.

Camera autofocus

Mirrorless cameras benefit enormously from machine learning and are trained to recognize everything from people to airplanes.

Your camera generally surveys the scene in an uncomprehending manner, either searching for contrast or analyzing different perspectives on the scene to assess how misaligned they are. It drives the lens until this contrast is maximized or the two perspectives come into alignment. Depending on the AF mode, the processor is often evaluating multiple focus points or relying on your help to specify a focus area.
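The contrast-seeking approach can be sketched in a few lines of Python. This is a toy illustration, not any camera maker's firmware: the one-row "scene", the box blur standing in for defocus, and the names `render`, `contrast`, and `contrast_detect_af` are all assumptions made for the example.

```python
def render(scene, blur_radius):
    """Simulate defocus by box-blurring a 1-D row of pixel values."""
    n = len(scene)
    out = []
    for i in range(n):
        lo, hi = max(0, i - blur_radius), min(n, i + blur_radius + 1)
        window = scene[lo:hi]
        out.append(sum(window) / len(window))
    return out


def contrast(pixels):
    """Contrast score: sum of squared differences between neighbouring pixels."""
    return sum((b - a) ** 2 for a, b in zip(pixels, pixels[1:]))


def contrast_detect_af(scene, true_focus, positions):
    """Step a hypothetical lens through positions and keep the sharpest one."""
    best_pos, best_score = None, -1.0
    for pos in positions:
        # The farther the lens is from the true focus position, the more blur.
        blurred = render(scene, abs(pos - true_focus))
        score = contrast(blurred)
        if score > best_score:
            best_pos, best_score = pos, score
    return best_pos


scene = [0] * 10 + [255] * 10  # a single hard edge
focus_position = contrast_detect_af(scene, true_focus=7, positions=range(0, 15))
print(focus_position)
```

Notice that nothing in the loop knows what the edge belongs to. It only chases the highest contrast score, which is the "uncomprehending" part.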

However, the autofocus system knows nothing about the scene. Increasingly, there is a separate process going on in parallel. Based on machine learning models, the processor is also trying to interpret the scene in front of the camera and identify subjects (such as faces or objects) in the scene.

We’ve seen this for a while on cameras that feature face and eye detection for focusing on people. Even when the focus target is positioned elsewhere in the frame, if the camera recognizes a face – and by extension, eyes – the focus point locks there. As machine learning algorithms have improved and processors have become faster at evaluating the images relayed from the sensor, newer cameras can now identify other specific objects such as birds, animals, automobiles, planes, and even camera drones. Some camera systems, such as the Canon EOS R3 and the Sony a7R V, can recognize specific people and focus on them when they’re in the frame.

Every smartphone picture you capture

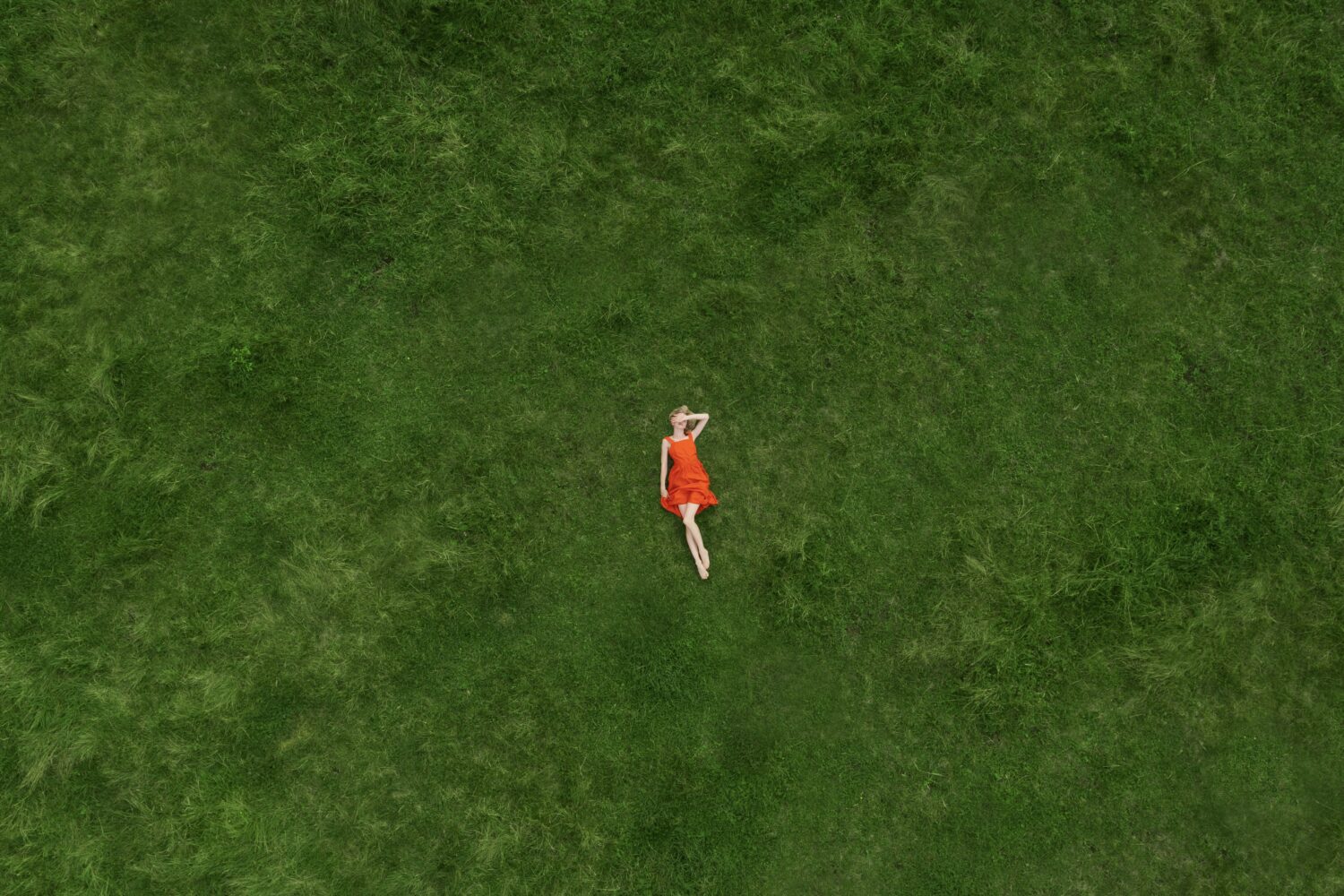

Photo Credit: Mike Tomkins

Photos from smartphone cameras shouldn’t look as good as they do. These cameras have tiny sensors and tiny lens elements. It may seem as if the cameras are taking up ever more space on our phones, but remember that there are two, three, or more individual cameras working in tandem to give you approximately the same focal range as an inexpensive kit lens for a DSLR or mirrorless body. The photos they produce should be small and unexceptional.

And yet, smartphone image quality is competing with photos made from cameras with larger sensors and better glass. How? Using dedicated image processors and a pipeline stuffed full of machine learning.

Before you even tap the shutter button on the phone screen, the camera system evaluates the scene and makes choices based on what it detects, such as whether you’re making a portrait or capturing a landscape. As soon as you tap, the camera captures multiple images with different exposure and ISO settings within a few milliseconds. It then blends them together, making adjustments based on what it identified in the scene, perhaps punching up the blue saturation and contrast in the sky, adding texture to a person’s hair or clothing, and prioritizing the in-focus captures to freeze moving subjects. It balances the tone and color and writes the finished image to memory.
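To get a feel for the blending step, here is a heavily simplified sketch in Python. It is not how Apple or Google actually do it (those pipelines are proprietary and also align frames, denoise, and apply scene-aware adjustments). It only shows one plausible idea, in the spirit of exposure fusion: weight each pixel by how close it is to a mid-tone, so well-exposed captures dominate the result. The function names, the `sigma` value, and the sample pixel values are assumptions for illustration.

```python
import math


def well_exposedness(value, mid=0.5, sigma=0.2):
    """Weight a pixel (0.0 to 1.0) by its closeness to a mid-tone."""
    return math.exp(-((value - mid) ** 2) / (2 * sigma ** 2))


def blend_frames(frames):
    """Per-pixel weighted average across frames of equal length."""
    blended = []
    for pixel_values in zip(*frames):
        weights = [well_exposedness(v) for v in pixel_values]
        total = sum(weights)  # always > 0 because exp() never returns 0 here
        blended.append(sum(w * v for w, v in zip(weights, pixel_values)) / total)
    return blended


# Three hypothetical exposures of the same four pixels (0.0 = black, 1.0 = white).
dark = [0.02, 0.10, 0.30, 0.50]
normal = [0.10, 0.40, 0.70, 0.95]
bright = [0.45, 0.80, 0.98, 1.00]

result = blend_frames([dark, normal, bright])
print([round(v, 2) for v in result])
```

In the last pixel, the dark frame holds usable detail while the other two are nearly blown out, so the blend leans toward the dark frame's value instead of averaging all three equally.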

Phone processors, like those from Apple and Google, include powerful graphics and machine learning accelerator cores, like the ‘Neural Engine’ in the A14 chip.

Sometimes all this processing is obvious, as in the case of people’s faces that look as if skin smoothing has been applied or night scenes that look like late afternoon. But in most cases, the result is remarkably close to what you saw with your eyes. It’s possible to divorce the processing from the original image capture by invoking Raw shooting modes or turning to third-party apps. However, the default is largely driven by machine learning models that have been trained on millions of similar images in order to determine which settings to adjust and how people and objects “should” look.

People recognition in software

Before we took advantage of face recognition in camera autofocus systems, our editing software was helping us find friends in our photo libraries. Picking out faces and people in images is a long-solved problem that naturally graduated to identifying specific individuals. Now, we don’t think twice in Google Photos, Lightroom, Apple Photos, or a host of others about being able to call up every image that contains a parent or friend. Not only can we find every image that contains Jeremy, we can narrow the results to show only photos with both Jeremy and Samantha.
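Once faces have been recognized and named, narrowing a search to photos that contain two people is conceptually a set intersection. The sketch below assumes the recognition step has already happened; the photo IDs, the `photo_faces` data, and the function names are hypothetical and do not reflect how any of these apps store their data.

```python
from collections import defaultdict


def build_people_index(photo_faces):
    """Map each recognized person to the set of photos they appear in."""
    index = defaultdict(set)
    for photo_id, names in photo_faces.items():
        for name in names:
            index[name].add(photo_id)
    return index


def photos_with_all(index, *names):
    """Return the photos that contain every named person."""
    sets = [index.get(name, set()) for name in names]
    return set.intersection(*sets) if sets else set()


# Hypothetical output of a face recognition pass over a small library.
photo_faces = {
    "IMG_001": ["Jeremy"],
    "IMG_002": ["Jeremy", "Samantha"],
    "IMG_003": ["Samantha"],
}

index = build_people_index(photo_faces)
both = photos_with_all(index, "Jeremy", "Samantha")
print(sorted(both))
```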

Google Photos identified all pictures containing Larry Carlson, even photos from when he was younger.

This technology isn’t limited to photos. DaVinci Resolve can find people in video footage, making it easy to locate all clips containing a specific actor or the bride at a wedding, for example.

Person recognition also enables machine learning-based masking features. Again, because the software knows what a ‘person’ looks like, it can assume that a person prominent in the frame is likely the subject and make a more accurate selection. It also recognizes facial features, enabling you to create a mask containing just a person’s eyes and then apply adjustments to lighten them and add more contrast, for instance.

The humble Auto button

At one point, clicking an Auto button in editing software was like placing a bet: the result could be a winner or a bust. Now, many automatic-edit controls are based on machine learning models. In Lightroom and Lightroom Classic, for example, the Auto button in the Edit or Basic panels pings Adobe Sensei (the company’s cloud-based processing technology) and gathers edit settings that match similar images in its database. Is your photo an underexposed wide-angle view of a canyon under cloudy skies? Sensei has seen multiple versions of that and can determine which combination of exposure, clarity, and saturation would improve your image.

This unedited raw capture could be edited in multiple ways in Lightroom Classic using combinations of sliders.

Clicking the Auto button in the Basic panel applies light and color adjustments based on millions of similar photos fed into the machine learning models that Adobe Sensei uses.

Pixelmator Pro and Photomator include ML buttons to apply machine learning to many blocks of controls, including White Balance and Hue & Saturation, to let the software take a first crack at how it thinks the edited image should appear. Luminar Neo is built on top of machine learning technology, so machine learning touches everything, including its magical Accent AI slider.

What’s great about these tools is that most of them will do the work for you, but you can then adjust the individual controls to customize how the image appears.

Search in many apps

Another lesser-known application of machine learning technologies in photo software is object and scene recognition to find photos and bypass the need to apply keywords. Although tagging photos in your library is a powerful way to organize them and be able to quickly locate them later, many people – advanced photographers included – don’t do it.

Apps already know quite a bit about our photos thanks to the metadata saved with them, including timestamps and location data. But the images themselves are still just masses of colored pixels. By scanning photos in the background or in the cloud, apps can identify things they recognize: skies, mountains, trees, cars, winter scenes, beaches, city buildings, and so on.

When you need to find something, such as photos you’ve made of farm houses, typing ‘farm house’ into the app’s search field will yield images that include (or evoke) rural buildings. The results aren’t as targeted as if you had tagged them with a “farm house” keyword, but it saves a lot of time narrowing your search.
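A rough way to picture this kind of search: a recognition model attaches labels with confidence scores to each photo, and the search box matches your words against those labels rather than against keywords you typed. The Python sketch below is an assumption-laden toy. The labels, scores, threshold, and the crude substring matching are all invented for illustration and say nothing about how Adobe Sensei or any other service works internally.

```python
def search_by_labels(photo_labels, query, min_confidence=0.5):
    """Rank photos whose machine-assigned labels match any query term."""
    terms = query.lower().split()
    results = []
    for photo_id, labels in photo_labels.items():
        # Add up the confidence of every sufficiently confident matching label.
        score = sum(
            conf
            for label, conf in labels.items()
            if conf >= min_confidence and any(term in label for term in terms)
        )
        if score > 0:
            results.append((score, photo_id))
    # Highest score first; ties broken by photo ID for stable output.
    results.sort(key=lambda r: (-r[0], r[1]))
    return [photo_id for _, photo_id in results]


# Hypothetical labels produced by a background scan of the library.
photo_labels = {
    "IMG_101": {"farmhouse": 0.92, "field": 0.80},
    "IMG_102": {"barn": 0.75, "farm": 0.66},
    "IMG_103": {"beach": 0.97, "sky": 0.90},
    "IMG_104": {"house": 0.40, "city": 0.88},
}

hits = search_by_labels(photo_labels, "farm house")
print(hits)
```

The city photo is left out because its "house" label falls below the confidence threshold, which hints at why these results are looser than a hand-applied keyword: everything depends on what the model thinks it saw.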

A search in the Lightroom desktop app for ‘farm house’ brings up images that don’t include those keywords, based solely on what Adobe Sensei identified in its scan.

OK, computer

AI technologies aren’t all about synthetic imagery with seven-fingered nightmare people. Even if you decide to never create an AI-generated image, when you grab a relatively recent camera or smartphone or sit down to most editing applications, you’ll benefit from features trained on machine learning models.

If they’re working as intended, your attention will be focused on making the image, not the technology.
